Misinformation Is Exploding

And not in a good way — AI is only partly to blame

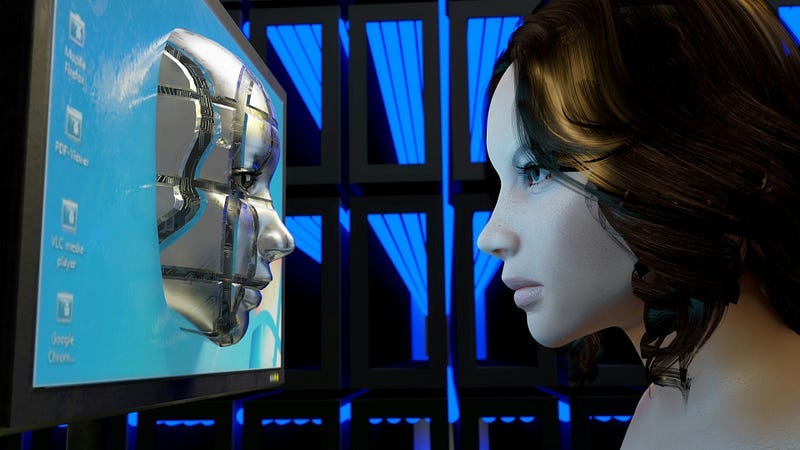

The internet has long been a cesspool of misinformation, and now AI is making it much worse. As AI-generated writing improves in quality and becomes more accessible, it is helping to destroy all trust in information.

When someone follows me on Medium, I click on their profile. I am curious about what attracted them to my writing. I also want to know whether they have written anything I might be interested in reading. If so, I follow them back.

Some who follow me don’t really seem to have any interest in my writing. They appear to be following as many people as possible primarily for the purpose of attracting more followers themselves.

I find the tactic annoying, but I don’t really have a problem with people trying to build a following however they can.

But a short time ago, I began to notice a new kind of follower. When I checked the profiles of these followers, I saw that they were posting a dozen or more essays in a single day and seemed to have done so repeatedly.

My first thought was that perhaps such people had a large backlog of writing they had decided to bring to Medium all at once. But then I recognized the obvious: these people were posting AI-generated essays.

Recently, Medium has taken steps to curtail AI-generated content. It’s hard to say how effective those steps have been. AI just keeps getting better at replicating human-written texts. I think I can still spot text that is obviously generated by AI, but now I wonder what I am not spotting.

I can easily imagine a time, in the foreseeable future, when a flood of high-quality AI-generated content on Medium crowds out content written by people.

Brute force sometimes defeats finesse. Quantity sometimes trumps quality.

What, if anything, can we do about it?

Look for signs of AI “hallucinations”

People who use AI to help them with writing often discover strange distortions of reality. AI chat tools seem to be prone to hallucination, creating information which does not map directly onto human-perceived reality.

Here is a simple example I discovered while doing some family history research online. Over the years, I have often tried to figure out the national origins of my family name, Stites. Stites is not a common name. It sounds like it could be English, but it might also be an Americanized version of Steitz or some other similar name.

That is basically what The Dictionary of American Family Names says in its entry for Stites: “1. English: unexplained. This surname is no longer found in Britain. 2. Americanized version of German Steitz or some other similar (like-sounding) surname.”

I know, however, from my deep research on family history, that my ninth great-grandfather was a man named Henry Stich (or Stiche; English spelling was very inconsistent in the seventeenth century). Henry migrated from England to the Massachusetts Bay Colony in about 1647. He was an indentured worker (a charcoal maker, or collier) for the Hammersmith Iron Works near Salem.

Henry Stich did not come alone. He was accompanied by his son, Richard, also an indentured charcoal maker. But Richard ran away from Massachusetts to escape his indenture and debt. He landed in what was then Dutch territory, the township of Hempstead on Long Island. In the Hempstead records, Richard Stich became Richard Stites and the latter spelling stuck.

Very recently, I did another search on “Stites surname origin” and found a new source that seemed, at first glance, to be very authoritative and accurate. This source said that Stites was a medieval English name that has since disappeared from England and also, accurately I think, noted that earlier versions of the name might be spelled as Stich, Stitch, Styche, Styitch, Stych, or something similar.

There was a lot of other information on the page about the origins and meaning of the family name Stites. Most of that information seemed credible, though some of it was new to me and that made me a bit skeptical.

Then I scrolled down to the bottom of the page to a list of “Famous People with the Name Stites” and this is where things began to look decidedly fishy.

I did not recognize any of the names and that surprised me. After all, I consider myself something of an expert on all things Stites. I have been doing research on my Stites ancestry and family lines for nearly a decade.

One name was particularly surprising — “Max Stites: Professional bull rider.” My father’s name was Max. My brother and his son are also named Max. I had to find out more about this bull riding Max Stites.

When I searched, I found not Max but Will Stites, who is indeed a professional bull rider.

Now I was more skeptical of the list. The next name that caught my eye was “Michael Stites: Pulitzer Prize-winning historian.” I am aware of a prominent historian named Richard Stites. He wrote a lot of good books, but he never won a Pulitzer.

As I looked back at the complete list of names and did more searching, I came to the conclusion that every single listing was fake, a distortion of reality in a way that is typical of AI.

It makes sense that a company that wants to distribute information on tens of thousands of surnames would assign the content creation task to AI. But consumers need to be aware that AI reality is not human reality.

Ultimately, AI cannot be trusted to give us the facts.

Triangulate your sources

Triangulation is a way to avoid putting too much trust in any one source of information. It’s an old trick I learned in graduate school while studying cultural anthropology and learning to do ethnography.

Let’s say your task is to learn all you can about a typical diet for a Bushman family of hunters and gatherers in the Kalahari Desert (though the basic principle will be no different if your task is learning what a typical Tech Bro in San Francisco might eat for lunch).

You should not be satisfied after interviewing one Bushman, no matter how much information they provide. You have to check your information against the information you get from interviewing as many other Bushmen as possible. And don’t just talk to the men. Ask the women and children too.

Comparing and checking information obtained from multiple sources — that’s triangulation. It works for ethnography and it can work for identifying AI hallucinations and other forms of misinformation.

Know your ability to understand reality is limited

Reality, and therefore truth, is a matter of perception and interpretation. Keep this in mind when evaluating information (or misinformation) from any source.

One of my favorite old-school ethnographers, Clifford Geertz, spent much of his career elaborating and illustrating this point. Geertz is best known for the idea of “thick description,” the notion that culture (and therefore, cultural description/ethnography) is always multi-layered.

Cultures can be thought of as systems of information composed of meaningful words, behaviors, signs, symbols, rituals, objects, relationships, and so on. Cultural actors manipulate meaningful components of culture to communicate, to confirm existing shared knowledge and meaning, and to generate new shared knowledge and meaning.

Geertz liked to say cultural description was like peeling the layers of an onion. Beneath each level of shared understanding among cultural actors there would always be another level of shared understanding perceived by those same cultural actors or by others.

But the ability of any one observer to uncover layers of cultural knowledge is limited and finite. In The Interpretation of Cultures, Geertz illustrated this point with the following anecdote:

“There is an Indian story — at least I heard it as an Indian story — about an Englishman who, having been told that the world rested on a platform which rested on the back of an elephant which rested in turn on the back of a turtle, asked (perhaps he was an ethnographer; it is the way they behave), what did the turtle rest on? Another turtle. And that turtle? ‘Ah, Sahib, after that it is turtles all the way down.’”

What is true of the limits of the human observer is even more true of the limits of the AI observer.

I am inclined to think that AI inhabits a reality that mimics but does not participate in human systems of cultural interpretation. Perhaps this is why (current) AI so often misleads itself into conveying misinformation.

As difficult as it is for individual humans to separate fact from fantasy, it seems even more difficult (if not impossible) for AI to do so. Is this just a technical glitch that can be fixed? I honestly don’t know.

Recognize the real problem is not AI, it’s human

At times of my deepest despair over the havoc AI is creating in human knowledge systems, I sometimes hold out hope that AI will somehow cease being a problem and become part of the solution to misinformation.

Perhaps AI will learn to fact-check. Perhaps it already has.

But misinformation will continue to plague us with or without any contribution from AI. I am sure AI could be used to intentionally mislead people and that is a worry. But I am more worried about the people who generate misinformation for their own benefit and to harm others.

Whenever I write about the massive misinformation machine that is Donald Trump and his MAGA followers, I get a response from someone reminding me that all politicians are liars, Democrats no less than Republicans. But that response is itself misinformation.

Of course, it is true that all politicians lie. But the scope and scale of the lies told by Donald Trump put him into a league of his own.

I could not bring myself to listen to Trump’s ninety-minute speech at the Republican National Convention. I read about it (from several different sources).

There was hope before the speech that Trump would change his tone in the wake of the recent attempt to assassinate him. He did start out well. He spoke of an end to violence and of the need for unity. But then he reverted to his usual “carnage” talk, rife with ridicule of his political opponents and full of spite and grievance. It was Trump as usual.

We can expect an explosion of misinformation from Trump and his followers over the next several months — years if we fail to stop him.

I can’t predict the future, but I hope there are enough Americans able to recognize and reject misinformation and vote against Trump.

If he is elected to the Presidency, AI hallucinations will be the least of our worries.